Samsung launches Galaxy S24 series with Galaxy AI, a gradual but significant evolution in AI

Samsung has just unveiled its new Galaxy S24 series, intended for professionals and equipped with a suite of artificial intelligence capabilities called Galaxy AI. The Korean manufacturer relied on its partnership with Google Cloud, drawing on the Gemini Pro and Imagen 2 technologies. This collaboration means that Galaxy S24 smartphones use Google’s cloud infrastructure to perform AI tasks. That infrastructure provides the computing power needed to run sophisticated AI models that would be too demanding for a smartphone on its own.

The Galaxy S24 family (S24 Ultra, S24+ and S24) thus gains artificial intelligence capabilities through Galaxy AI. The new features are intended to simplify everyday life, enable communication without borders and reinvent the web experience on mobile.

The integration takes place on several levels and affects key aspects of smartphone use: communication, organization, search, and photography and content creation. Together, these features represent a notable advance in the smartphone user experience.

Features enhanced by AI

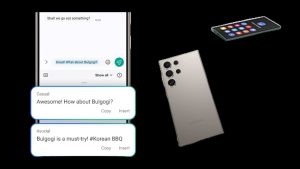

Communication functions benefit greatly from AI, with capabilities such as Live Translate and Interpreter. These tools enable real-time translation of phone conversations and face-to-face dialogues into multiple languages. In addition, Chat Assist and Samsung Keyboard help with message wording and real-time translation. Their operation relies on an internet connection to access language processing algorithms and linguistic databases.

In the area of organization, Note Assist, built into Samsung Notes, uses AI to generate summaries and structure notes. Likewise, Transcript Assist can transcribe, summarize and translate audio recordings.

Online search and discovery are also enhanced by “Circle to Search with Google,” which lets users search for information online by selecting items on the screen.

Finally, photography and content creation are enriched by the ProVisual Engine, a set of AI-powered tools designed to improve photography. It includes AI-based editing features and Edit Suggestion. Generative Edit, which fills in missing parts of the background and moves objects within an image, also relies on AI. All of these applications require an internet connection to access search databases, Google’s image processing algorithms and the AI models used for speech and image processing.

While waiting for true on-device inference

Despite significant progress, this evolution towards AI is only a first step towards the integration of true AI into smartphones. It’s important to understand the difference between an AI-based feature and inference built into a device. AI-based features are the simplest form of AI integration in smartphones used so far.

Although manufacturers have often invoked AI to describe certain functions, these functions are not based on real-time inference. Rather, they are simple, single-purpose algorithms such as facial recognition or automatic translation, even if they are built on machine learning. For example, facial recognition is often presented as AI, but it is actually based on an algorithm that compares the user’s facial landmarks to those stored in its database.

True inference is more complex and requires more computing power. It can be given the capacity to learn and adapt, which allows it to improve its performance over time. We are not there yet.

AI-based inference is a resource-intensive technology, which means chip designers and manufacturers must improve computational capabilities (processor, memory, and specialized coprocessors such as the NPU, or Neural Processing Unit) to run inference algorithms. Although newer smartphones have the computing power to perform simple AI functions, it will still be a few years before they can run real, more complex AI engines.

Understandable caution from manufacturers

This explains why these AI functionalities need a mobile network connection. Smartphones must be able to access computing resources and data in the cloud. AI models, especially those used for natural language processing and image analysis, require a considerable amount of computing power and data storage, which are available on cloud servers rather than on the device itself. Beyond the limits on computing power, current smartphones also lack the battery life to sustain intensive inference workloads.

Finally, integrating AI through software engineering and increasing the processing capabilities of smartphones can drive up production costs, undermine the fragile balance of the current business model and, ultimately, raise the sale price. Smartphone manufacturers must invest in research and development, as well as in training their teams.

Additionally, they must invest in software engineering to meet inference requirements. These are costly initiatives for a finely balanced business model. All of these limitations explain the caution of smartphone manufacturers. They also explain why most of the AI functions of the new Galaxy require an active mobile network connection and a Samsung account in order to access the necessary computing resources and databases, which are located on remote servers.